A Conditional Diffusion Model for Hand-drawing Generation

This homework implements an efficient classifier-free guidance (CFG) diffusion model that generates hand-drawn images conditioned on 12 class labels, using only 6,586,369 model parameters and 100 diffusion steps.
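The README fixes the number of diffusion steps at T = 100 but does not state the noise schedule. As an illustration only, the sketch below assumes the widely used cosine schedule (a swapped-in choice, not necessarily the one this project uses). It shows how the forward process corrupts a clean image x_0 into x_t in closed form. Images are flattened to plain Python lists so the example runs with the standard library alone. `cosine_alpha_bar`, `betas_from_alpha_bar`, and `q_sample` are hypothetical helper names.

```python
import math
import random

T = 100  # number of diffusion steps stated in the README


def cosine_alpha_bar(T: int, s: float = 0.008) -> list[float]:
    """Cumulative products alpha_bar_t for t = 0..T.

    ASSUMPTION: cosine schedule; alpha_bar_0 = 1 (clean image) and
    alpha_bar_T is close to 0 (almost pure noise).
    """
    def f(t: int) -> float:
        return math.cos(((t / T) + s) / (1 + s) * math.pi / 2) ** 2

    return [f(t) / f(0) for t in range(T + 1)]


def betas_from_alpha_bar(alpha_bar: list[float], max_beta: float = 0.999) -> list[float]:
    """Per-step variances beta_t = 1 - alpha_bar_t / alpha_bar_{t-1}, clipped to max_beta.

    The returned list has T entries; index t - 1 holds beta_t.
    """
    return [min(1 - alpha_bar[t] / alpha_bar[t - 1], max_beta)
            for t in range(1, len(alpha_bar))]


def q_sample(x0: list[float], t: int, alpha_bar: list[float],
             rng: random.Random) -> tuple[list[float], list[float]]:
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I).

    Returns (x_t, noise); the noise is the regression target for the network.
    """
    a = alpha_bar[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


# Usage: noise a toy 3-pixel "image" halfway through the schedule.
alpha_bar = cosine_alpha_bar(T)
betas = betas_from_alpha_bar(alpha_bar)
x_t, eps = q_sample([0.5, -0.25, 1.0], 50, alpha_bar, random.Random(0))
```

With only 100 steps, the schedule has to reach an alpha_bar_T near zero so that x_T is close to pure Gaussian noise, which is where sampling starts. The cosine schedule satisfies this by construction.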

Introduction

We are given a dataset of hand-drawn images covering 12 categories: "airplane", "bus", "car", "fish", "guitar", "laptop", "pizza", "sea turtle", "star", "t-shirt", "The Eiffel Tower", and "yoga". Our goal is to generate new hand-drawn images conditioned on these categories.
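One simple way to feed these categories to the network is to map each name to an integer class id. The sketch below assumes the ids follow the order listed above and reserves one extra id for the "no label" token that classifier-free guidance needs. `CATEGORIES`, `CLASS_TO_ID`, `NULL_ID`, and `NUM_EMBEDDINGS` are hypothetical names, not taken from the project's code.

```python
# The 12 categories listed above, in the order given in this README.
CATEGORIES = [
    "airplane", "bus", "car", "fish", "guitar", "laptop",
    "pizza", "sea turtle", "star", "t-shirt", "The Eiffel Tower", "yoga",
]

# Hypothetical mapping from category name to integer class id (0..11).
CLASS_TO_ID = {name: i for i, name in enumerate(CATEGORIES)}

# Hypothetical extra id for the null (unconditional) label used by
# classifier-free guidance, so a label embedding table needs 13 rows.
NULL_ID = len(CATEGORIES)
NUM_EMBEDDINGS = len(CATEGORIES) + 1

print(CLASS_TO_ID["sea turtle"], NULL_ID)  # prints: 7 12
```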

We adopt a U-Net-based diffusion model to generate images. For conditioning, we use classifier-free guidance.
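Classifier-free guidance trains a single network to act as both a conditional and an unconditional denoiser. During training, the class label is randomly replaced with a null token. The sketch below shows that label-dropout step. The drop probability `p_uncond` (0.1 in the usage below), the null id 12, and the helper name `drop_labels` are assumptions for illustration, not values taken from this project.

```python
import random


def drop_labels(labels: list[int], null_id: int, p_uncond: float,
                rng: random.Random) -> list[int]:
    """Return a copy of `labels` where each class id is replaced by `null_id`
    with probability `p_uncond` (classifier-free guidance label dropout)."""
    return [null_id if rng.random() < p_uncond else y for y in labels]


# Usage: a batch of class ids (0..11) with a hypothetical null id of 12.
rng = random.Random(0)
batch = [0, 3, 7, 11, 4]
print(drop_labels(batch, null_id=12, p_uncond=0.1, rng=rng))
```

The denoising loss itself is unchanged. The network simply sees the null id on roughly a `p_uncond` fraction of the examples, so at sampling time it can be queried both with and without the true label.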

Results

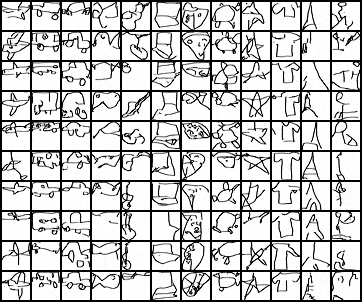

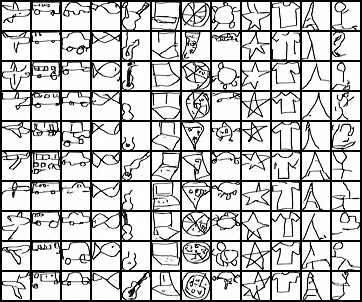

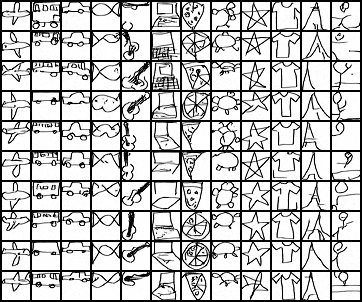

The generated results are shown below:

Guidance Scale = 0.5

Guidance Scale = 0.5  Guidance Scale = 2.0

Guidance Scale = 2.0  Guidance Scale = 5.0

Guidance Scale = 5.0
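The guidance scale controls how strongly sampling is pushed toward the class condition. At each sampling step, the network is evaluated twice, once with the true class id and once with the null id, and the two noise predictions are combined. The sketch below assumes the convention eps_uncond + w * (eps_cond - eps_uncond), under which w = 1 recovers the plain conditional model. Some implementations instead use (1 + w) * eps_cond - w * eps_uncond, so a given numeric scale may not mean the same thing in this project's code. `guided_eps` is a hypothetical name.

```python
def guided_eps(eps_cond: list[float], eps_uncond: list[float],
               w: float) -> list[float]:
    """Combine conditional and unconditional noise predictions with
    classifier-free guidance scale w.

    ASSUMED convention: w = 0 -> unconditional, w = 1 -> conditional,
    w > 1 -> extrapolate past the conditional prediction.
    """
    return [u + w * (c - u) for c, u in zip(eps_cond, eps_uncond)]


# Usage: toy one-element predictions at the three scales shown above.
for w in (0.5, 2.0, 5.0):
    print(w, guided_eps([1.0], [0.0], w))
# prints: 0.5 [0.5], then 2.0 [2.0], then 5.0 [5.0]
```

Under this convention, w = 0.5 lies between the unconditional and conditional predictions, while w = 2.0 and w = 5.0 extrapolate beyond the conditional one. Larger scales typically trade sample diversity for closer adherence to the class.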

For more implementation details, please refer to the GitHub page of this project.